MemSQL is a distributed, SQL-based database management system. On April 23, 2013, MemSQL launched its first generally available version of the database to the public.

To process highly volatile workloads, MemSQL combines lock-free data structures with just-in-time (JIT) compilation. More specifically, MemSQL implements lock-free hash tables and lock-free skip lists in memory for fast random access to data. SQL queries sent to the MemSQL server are converted into bytecode and compiled through LLVM into machine code. Queries are then stripped of their parameters, and the query template is stored as a shared object, which is subsequently matched against incoming queries. Executing pre-compiled query plans removes interpretation along hot code paths, minimizing the number of central processing unit (CPU) instructions required to process SQL statements.
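The parameter-stripping and template-matching step described above can be illustrated with a minimal Python sketch. It is not MemSQL's implementation: the names `parameterize` and `PlanCache` are hypothetical, the regular expression only recognizes simple numeric and single-quoted string literals, and the "compilation" step is a stand-in closure rather than LLVM code generation.

```python
import re

# Matches single-quoted string literals or standalone numeric literals.
# Assumption: this simple pattern is enough for the illustration; a real
# SQL parser handles far more cases.
_LITERAL = re.compile(r"'(?:[^']|'')*'|\b\d+(?:\.\d+)?\b")


def parameterize(sql):
    """Return (template, params), replacing each literal with '?'."""
    params = []

    def _swap(match):
        params.append(match.group(0))
        return "?"

    template = _LITERAL.sub(_swap, sql)
    return template, params


class PlanCache:
    """Hypothetical cache that maps query templates to compiled plans."""

    def __init__(self):
        self._plans = {}
        self.compilations = 0  # counts how often the costly step runs

    def _compile(self, template):
        # Stand-in for the expensive code-generation step.
        self.compilations += 1
        return lambda params: (template, tuple(params))

    def execute(self, sql):
        template, params = parameterize(sql)
        plan = self._plans.get(template)
        if plan is None:
            # First time this query shape is seen: compile and remember it.
            plan = self._compile(template)
            self._plans[template] = plan
        return plan(params)
```

In this sketch, two queries that differ only in their literal values share one template, so the second call reuses the cached plan and `compilations` stays at 1.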

MemSQL can store database tables either as row stores or column stores. The format used is determined by the user at DDL time (i.e., when the table is created). Data for all row store tables is stored completely in memory, with snapshots and transaction logs persisted to disk. Data for all column store tables is stored on disk, using a row store-like structure to handle incoming inserts into the column store.
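How a row-oriented structure can front a column store is sketched below in Python. The class `ColumnStoreTable`, its `flush_threshold` parameter, and the segment layout are assumptions made for illustration; the source does not describe when or how MemSQL moves buffered rows into columnar form.

```python
class ColumnStoreTable:
    """Toy column store with a row-oriented buffer for incoming inserts."""

    def __init__(self, columns, flush_threshold=3):
        self.columns = list(columns)
        self.flush_threshold = flush_threshold  # hypothetical tuning knob
        self.buffer = []    # row-oriented staging area for new inserts
        self.segments = []  # each segment maps column name -> list of values

    def insert(self, row):
        if len(row) != len(self.columns):
            raise ValueError("row width does not match table definition")
        self.buffer.append(tuple(row))
        if len(self.buffer) >= self.flush_threshold:
            self.flush()

    def flush(self):
        """Convert buffered rows into one columnar segment."""
        if not self.buffer:
            return
        segment = {
            name: [row[i] for row in self.buffer]
            for i, name in enumerate(self.columns)
        }
        self.segments.append(segment)
        self.buffer = []

    def column_values(self, name):
        """Yield one column's values from the segments, then the buffer."""
        index = self.columns.index(name)
        for segment in self.segments:
            yield from segment[name]
        for row in self.buffer:
            yield row[index]
```

A scan such as `sum(table.column_values("amount"))` reads only the requested column from the flushed segments and then picks up any rows still waiting in the buffer.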

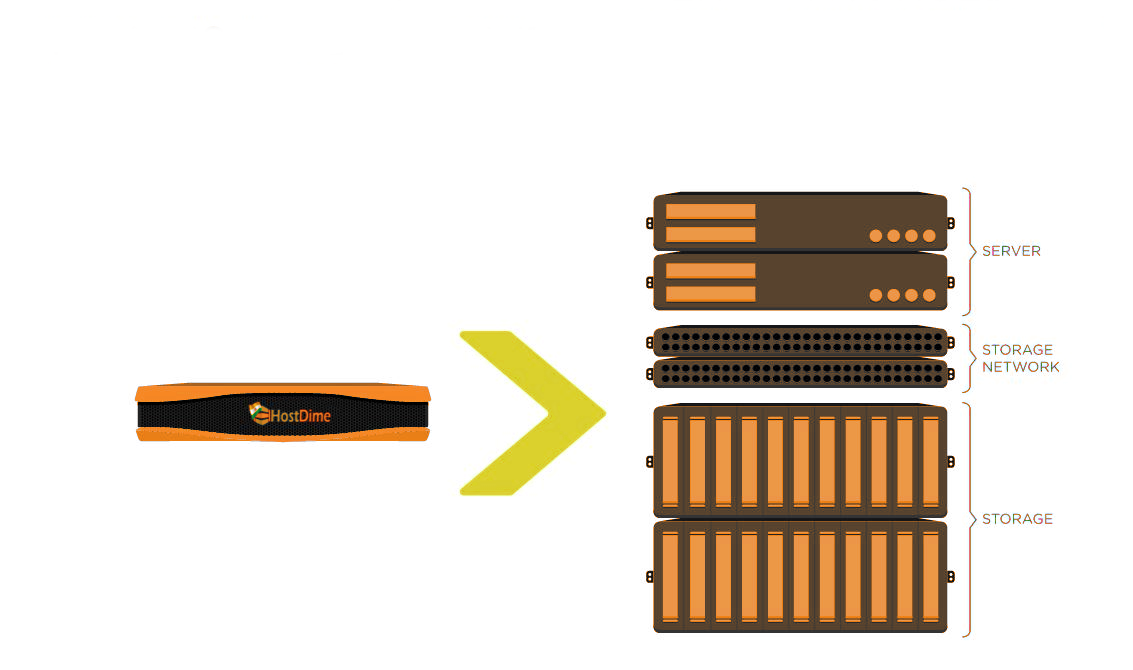

A MemSQL database is a distributed database implemented with aggregators and leaf nodes. The MemSQL binaries used for aggregator and leaf nodes are nearly the same; the only difference is whether the user identifies the node as an aggregator or a leaf. An aggregator is responsible for receiving SQL queries, breaking them up across leaf nodes, and aggregating results back to the client. A leaf node stores MemSQL data and processes queries from the aggregator. All communication between aggregators and leaf nodes takes place over the network using SQL. MemSQL uses hash partitioning to distribute data uniformly across the leaf nodes.
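The division of labor between aggregators and leaves can be sketched in a few lines of Python. This is a simplified illustration, not MemSQL's design: the classes `Leaf` and `Aggregator` are hypothetical, CRC32 stands in for whatever hash function the database actually uses, and keys are mapped straight to leaves rather than to partitions that are then placed on leaves.

```python
import zlib


class Leaf:
    """Stores rows and answers partial queries from the aggregator."""

    def __init__(self):
        self.rows = []

    def insert(self, row):
        self.rows.append(row)

    def partial_count(self):
        return len(self.rows)


class Aggregator:
    """Routes writes by hashed key and merges per-leaf partial results."""

    def __init__(self, leaves):
        self.leaves = leaves

    def leaf_for(self, shard_key):
        # Assumption: CRC32 of the key's string form, modulo the leaf count.
        digest = zlib.crc32(str(shard_key).encode("utf-8"))
        return self.leaves[digest % len(self.leaves)]

    def insert(self, shard_key, row):
        self.leaf_for(shard_key).insert(row)

    def count(self):
        # Scatter the query to every leaf, then gather and combine.
        return sum(leaf.partial_count() for leaf in self.leaves)
```

Because the hash is deterministic, a given key always lands on the same leaf, which lets the aggregator send a lookup by key to a single leaf while an aggregate such as `count()` has to visit all of them.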

MemSQL durability differs slightly between its in-memory row store and its on-disk column store. Durability for the in-memory row store is implemented with a write-ahead log and snapshots, similar to checkpoints. With default settings, as soon as a transaction is acknowledged in memory, the database will asynchronously write the transaction to disk as fast as the disk allows. The on-disk column store is fronted by an in-memory row store-like structure (a skip list). This structure has the same durability guarantee as the MemSQL row store. Apart from that, the column store is durable since its data is stored on disk.
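The combination of a write-ahead log and snapshots can be illustrated with a small Python key-value store. This is a sketch under stated assumptions: the class `DurableStore` and the file names `wal.log` and `snapshot.json` are invented for the example, and for simplicity the log record is written synchronously before the in-memory update, whereas the default behavior described above acknowledges in memory first and writes to disk asynchronously.

```python
import json
import os


class DurableStore:
    """Toy in-memory key-value store recovered from snapshot plus log."""

    def __init__(self, directory):
        self.log_path = os.path.join(directory, "wal.log")
        self.snap_path = os.path.join(directory, "snapshot.json")
        self.data = {}
        self._recover()
        self._log = open(self.log_path, "a", encoding="utf-8")

    def _recover(self):
        # Load the latest snapshot, then replay log records written after it.
        if os.path.exists(self.snap_path):
            with open(self.snap_path, encoding="utf-8") as f:
                self.data = json.load(f)
        if os.path.exists(self.log_path):
            with open(self.log_path, encoding="utf-8") as f:
                for line in f:
                    key, value = json.loads(line)
                    self.data[key] = value

    def put(self, key, value):
        # Append to the log first, then apply the change in memory.
        # flush() hands the record to the OS; it does not force it to disk.
        self._log.write(json.dumps([key, value]) + "\n")
        self._log.flush()
        self.data[key] = value

    def snapshot(self):
        # Write the full state atomically, then start a fresh, empty log.
        tmp_path = self.snap_path + ".tmp"
        with open(tmp_path, "w", encoding="utf-8") as f:
            json.dump(self.data, f)
        os.replace(tmp_path, self.snap_path)
        self._log.close()
        self._log = open(self.log_path, "w", encoding="utf-8")

    def close(self):
        self._log.close()
```

Reopening a `DurableStore` on the same directory rebuilds the in-memory state from the snapshot and the log tail. Taking a snapshot bounds recovery time, because only records written since the last snapshot need to be replayed.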

A MemSQL cluster can be configured in “High Availability” mode, where every data partition is automatically created with master and slave versions on two separate leaf nodes. In High Availability mode, aggregators send transactions to the master partitions, which then send logs to the slave partitions. In the event of an unexpected master failure, the slave partitions take over as master partitions in a fully online operation.
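The master/slave arrangement for a single partition can be sketched as follows. The classes `Partition` and `PartitionPair` and the leaf names are hypothetical, log shipping is modeled as a direct in-process append rather than network replication, and failure detection is left out entirely; the sketch only shows the promotion step.

```python
class Partition:
    """One copy of a data partition, living on a named leaf node."""

    def __init__(self, leaf):
        self.leaf = leaf
        self.log = []


class PartitionPair:
    """A master copy and a slave copy placed on two different leaves."""

    def __init__(self, master_leaf, slave_leaf):
        if master_leaf == slave_leaf:
            raise ValueError("master and slave must be on separate leaves")
        self.master = Partition(master_leaf)
        self.slave = Partition(slave_leaf)

    def write(self, record):
        # The aggregator writes to the master, which ships the log record
        # to the slave (modeled here as a simple append).
        self.master.log.append(record)
        if self.slave is not None:
            self.slave.log.append(record)

    def fail_over(self, failed_leaf):
        # If the master's leaf failed, promote the slave to master.
        if self.slave is not None and self.master.leaf == failed_leaf:
            self.master, self.slave = self.slave, None
```

After `fail_over`, writes continue against the promoted copy, which already holds every record shipped before the failure. A real cluster would also create a new slave copy elsewhere, which this sketch omits.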

MemSQL ships with an installation, management, and monitoring tool called MemSQL Ops. When installing MemSQL, Ops can be used to set up the distributed MemSQL database across machines and to provide metrics about the running system. MemSQL Ops has both a web user interface and a command-line interface. Starting with MemSQL 4.1, launched in September 2015, users can install Apache Spark as part of the MemSQL cluster and use Spark as an ETL tool to import data into MemSQL. Apache Spark is installed and set up interactively using MemSQL Ops. Ops users can then define the extract, transform, and load phases of their data pipeline to import data into MemSQL. Running data pipelines can be managed and monitored within the Ops UI.